As Nvidia CEO Jensen Huang steps onto the stage this week for the company’s annual software developer conference, he will address growing concerns over artificial intelligence (AI) costs and competition. The tech giant, now valued at nearly $3 trillion, has seen its dominance challenged as industry players seek more efficient AI solutions.

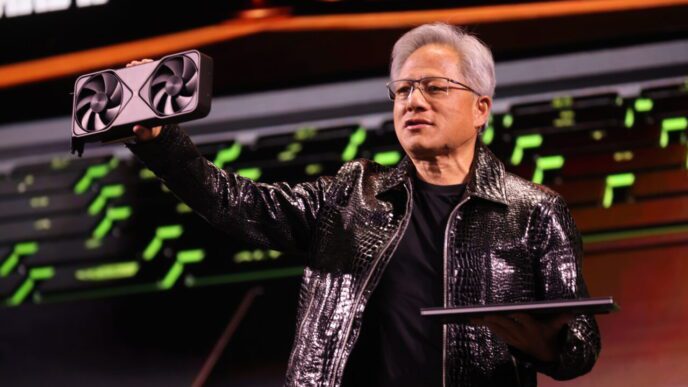

The conference follows a market shake-up caused by China’s DeepSeek, which introduced a competitive chatbot requiring significantly less computing power than its rivals. Nvidia’s stock took a hit, as its business model depends heavily on selling high-powered chips, some costing tens of thousands of dollars. These chips have been instrumental in lifting the company’s annual revenue to $130.5 billion over the past three years.

Introducing the Vera Rubin Chip System

One of the major highlights expected at the conference is the unveiling of Nvidia’s latest chip system, Vera Rubin, named after the astronomer whose work provided key evidence for dark matter. The system is set for mass production later this year, even as its predecessor, the Blackwell chip (named after mathematician David Blackwell), has been hit by production delays. Those setbacks have cut into Nvidia’s profit margins, adding pressure to deliver on its next-generation hardware.

Shifting AI Markets: Training vs. Inference

Nvidia remains dominant in AI model training, holding over 90% of the market. However, the industry’s focus is shifting from training, where models learn from massive datasets, to inference, where a trained model applies what it has learned to generate responses. In inference computing, Nvidia faces increased competition from companies striving to deliver more cost-efficient solutions.

A Battle Over AI Efficiency

AI inference can range from simple smartphone tasks, like email auto-suggestions, to large-scale financial data analysis in data centers. Numerous Silicon Valley startups and established competitors such as Advanced Micro Devices (AMD) are developing chips that aim to lower inference costs, particularly electricity consumption. Nvidia’s chips require so much power that some AI firms are even considering nuclear energy as a power source.

Bob Beachler, Vice President at Untether AI, one of at least 60 startups aiming to disrupt Nvidia’s inference market, commented: “They have a hammer, and they’re just making bigger hammers. They own the training market, but every new chip they launch carries training baggage.”

However, Nvidia argues that AI reasoning, a newer technique in which chatbots generate and review their responses before providing a final answer, will work in its favor. This reasoning process increases computing demand, which plays to Nvidia’s strength in high-powered AI chips.

Future Growth Potential in AI Inference

According to Jay Goldberg, CEO of D2D Advisory, inference computing is poised for massive growth. “The inference market will be many times larger than the training market. Nvidia’s market share may decline, but the overall revenue pool could expand significantly.”

Beyond AI Chatbots: Quantum Computing and Robotics

Nvidia is also set to share its views on quantum computing, a technology that Huang suggested in January was still decades away from practical use. His comments triggered stock declines in quantum computing firms and prompted companies like Microsoft and Google to argue that breakthroughs are much closer. In response, Nvidia has dedicated an entire day of its conference to the quantum industry’s future and its own role in it.

Another focus will be Nvidia’s push into robotics. The company aims to apply AI advancements to create smarter, more autonomous robots, using techniques similar to those used in chatbot development.

The Personal Computing Market: Nvidia vs. Intel

Nvidia is also expanding into central processing units (CPUs) for personal computers, a project first revealed by Reuters in January. With this move, the company could challenge Intel’s remaining market share, further cementing its influence in the computing industry.

Industry analyst Maribel Lopez weighed in, stating: “If successful, Nvidia’s CPU efforts could erode what’s left of Intel’s market dominance.”

Huang’s keynote address on Tuesday will provide critical insights into Nvidia’s strategy to maintain its AI leadership. With competition intensifying and market shifts underway, all eyes will be on how Nvidia adapts to these emerging challenges while continuing to shape the future of AI and computing.