As artificial intelligence continues to make breakthroughs across industries, the companies at the forefront of AI model development are embracing a new trend: making their systems run more efficiently. The shift follows a pivotal moment in January, when China’s DeepSeek released open-source AI software that shocked the tech world by matching or outperforming models from giants like OpenAI and Google at a fraction of the cost. The release forced many AI companies to reassess their approach to AI development, particularly the computing power required to run their models.

Over the last few months, several leading companies in the AI space have embraced a “less-is-more” philosophy, focusing on reducing the number of chips needed to power their systems without sacrificing performance. This shift toward efficiency is not just about cutting costs—it’s also about improving accessibility and scalability for businesses that require advanced AI capabilities but have limited access to high-powered hardware.

Cohere and Google Lead the Charge

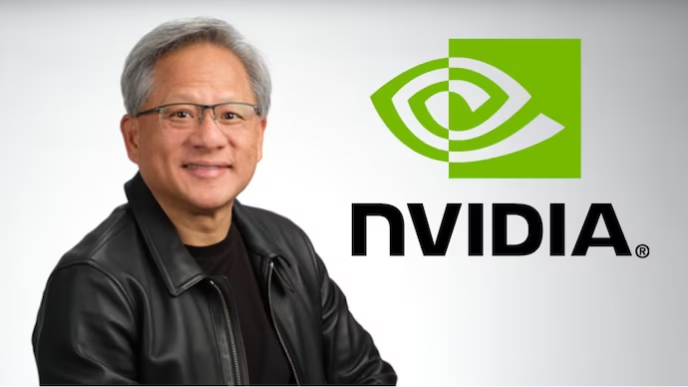

Among the trailblazers in this new trend are Cohere Inc. and Google, both of which have recently introduced models that run on fewer chips while delivering comparable or even superior performance to larger systems. On Thursday, Cohere is set to unveil a new model called Command A, which can perform complex business tasks using just two Nvidia A100 or H100 chips. That stands in stark contrast to some of the largest AI models, which require far more chips to function. According to reports, even DeepSeek’s most recent system would need more chips than Cohere’s Command A model, highlighting the significant potential for efficiency gains in the AI space.

A day before Cohere’s announcement, Google also made waves by revealing its new series of Gemma AI models, which, according to the company, can operate effectively on a single Nvidia H100 chip. This is another example of the growing trend of AI companies reducing their reliance on large-scale hardware in favor of more efficient systems. Google claimed that its Gemma AI models rivaled or even outperformed DeepSeek’s AI software in certain tasks, further fueling the conversation about efficiency and performance in AI development.

Both announcements underscore that high-performance AI systems don’t necessarily require vast amounts of computational power. By optimizing their use of available resources, these companies are demonstrating that advanced AI models can be both effective and efficient.

The DeepSeek Effect

The timing of these announcements from Cohere and Google is no coincidence. In January, DeepSeek’s open-source AI software shocked the industry by delivering performance on par with or exceeding the capabilities of models from well-established companies like Google and OpenAI, all while being built at a fraction of the cost. This was made possible by DeepSeek’s innovative approach to chip usage, which focused on maximizing the capabilities of existing hardware rather than relying on more powerful—and more expensive—chips.

DeepSeek’s success has sent ripples through the AI industry, forcing companies to reevaluate the traditional approach to AI development. For years, the prevailing assumption was that more powerful hardware and larger models were the key to better performance. DeepSeek’s results suggest that impressive performance is achievable with fewer resources. That has prompted companies like Cohere and Google to rethink their strategies and move toward AI systems that are not only more effective but also more cost-efficient.

Cost-Efficiency Becomes a Key Focus

The rise of DeepSeek has undoubtedly reshaped the conversation around AI development. Prior to DeepSeek’s breakthrough, many AI companies were primarily focused on scaling up their models by adding more chips and increasing computational power. This approach led to ever-growing data centers and sky-high costs associated with developing and running AI systems. However, the success of DeepSeek has demonstrated that there may be another path forward—one that prioritizes efficiency over raw computational power.

For companies like Cohere, the shift toward more efficient AI models is not just about cutting costs—it’s also about making advanced AI more accessible to a wider range of customers. Many businesses, especially smaller ones, have limited access to high-powered computing infrastructure. By designing models that run on fewer chips, companies can help democratize access to AI, enabling businesses with fewer resources to leverage the power of AI in their operations.

A Wake-Up Call for the Industry

Industry experts, including Aidan Gomez, the co-founder and CEO of Cohere, have hailed DeepSeek’s success as a “wake-up call” for the AI industry. Gomez argued that the attention DeepSeek has received has highlighted the inefficiencies that have long existed in the way many companies develop and deploy AI systems. “It was a very healthy wake-up call,” he said in a recent interview, acknowledging that the industry has been too focused on scaling up models without fully considering how to optimize their performance using fewer resources.

Gomez believes that DeepSeek’s rise has had a positive impact on the industry by showing that it is possible to build cutting-edge AI models without relying on massive amounts of computational power. This realization has pushed companies like Cohere to invest more in creating AI models that are not only high-performing but also efficient and cost-effective. According to Gomez, this shift could lead to significant changes in the way AI is developed and deployed, making it more accessible to a wider range of businesses and organizations.

Cohere’s Focus on Business AI

For Cohere, which was recently valued at $5.5 billion, the move toward more efficient AI models is especially important. Cohere focuses on providing AI solutions for business applications, which means that its customers often face limitations in terms of computing power. By developing AI models that require fewer chips to run, Cohere is better positioned to meet the needs of business customers who may not have the same access to advanced computing infrastructure as large tech companies.

This approach has significant advantages for businesses. AI models that run on fewer chips are not only cheaper to build and maintain but also easier to deploy at scale. As businesses increasingly turn to AI to streamline operations and drive innovation, models that are both efficient and scalable will be crucial to meeting the growing demand for AI-powered solutions.

The Future of AI Efficiency

The trend toward more efficient AI models is still in its early stages, but it has the potential to reshape the AI landscape. As companies like Cohere and Google continue to lead the charge, more AI firms can be expected to follow suit by focusing on efficiency without sacrificing performance. The success of DeepSeek has demonstrated that advanced AI systems don’t have to be built on massive hardware infrastructure, and this realization could pave the way for a new era of AI development, one that is more accessible, cost-effective, and sustainable.

As the AI industry moves toward this more efficient future, it’s likely that we will see even more innovations in how AI models are designed and deployed. With fewer resources needed to power these systems, companies will be able to deliver AI solutions that are not only powerful but also more widely available to businesses and organizations of all sizes.

The AI industry is undergoing a significant transformation, driven in part by DeepSeek’s success and the growing recognition that efficiency is just as important as raw computational power. Companies like Cohere and Google are leading the way by developing AI models that run on fewer chips while maintaining high performance. This shift toward more efficient AI systems could have far-reaching implications for the future of AI, making it more accessible and cost-effective for businesses around the world. As the industry continues to evolve, the focus will likely shift from sheer scale to smarter, more efficient solutions that benefit developers and end users alike.